Chinese Person-Relation Analysis with Bi-GRU Semantic Parsing | Full Code Included

Preparation Before the Experiment

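The idea of a bidirectional GRU with character-level attention comes from the paper "Attention-Based Bidirectional Long Short-Term Memory Networks for Relation Classification" [Zhou et al., 2016]. Here the LSTM in the original architecture is replaced with a GRU, and each Chinese character in a sentence is fed in as a character embedding. The model is trained on each input sentence, with attention applied at the character level.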
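To make the LSTM-to-GRU swap concrete, here is a minimal pure-Python sketch of a single GRU update in its commonly used formulation. It is only an illustration: the parameter names (`Wz`, `Uz`, and so on) are made up for this sketch, biases are omitted, and `tf.contrib.rnn.GRUCell` may organise its weights and gate convention differently.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def gru_step(x, h, p):
    """One GRU update for input x and previous state h.

    p is a dict of weight matrices with hypothetical names:
    Wz, Wr, Wh act on x; Uz, Ur, Uh act on the state. Biases are omitted.
    """
    # update gate: how much of the candidate replaces the old state
    z = [sigmoid(a + b) for a, b in zip(matvec(p["Wz"], x), matvec(p["Uz"], h))]
    # reset gate: how much of the old state feeds the candidate
    r = [sigmoid(a + b) for a, b in zip(matvec(p["Wr"], x), matvec(p["Ur"], h))]
    reset_h = [ri * hi for ri, hi in zip(r, h)]
    cand = [math.tanh(a + b)
            for a, b in zip(matvec(p["Wh"], x), matvec(p["Uh"], reset_h))]
    # interpolate between the old state and the candidate
    return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]


def zeros(rows, cols):
    return [[0.0] * cols for _ in range(rows)]


# toy run: 3-dim input, 2-dim state, all-zero weights
params = {"Wz": zeros(2, 3), "Wr": zeros(2, 3), "Wh": zeros(2, 3),
          "Uz": zeros(2, 2), "Ur": zeros(2, 2), "Uh": zeros(2, 2)}
print(gru_step([1.0, 2.0, 3.0], [1.0, -2.0], params))
```

Compared with an LSTM, there is no separate cell state and only two gates, which is why the swap reduces the number of parameters per hidden unit.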

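class Settings(object):
    # hyperparameters; instantiated as network.Settings() in the training script
    def __init__(self):
        self.vocab_size = 16691   # rows in the embedding table (overwritten from vec.npy at training time)
        self.num_steps = 70       # fixed sentence length, in characters
        self.num_epochs = 10
        self.num_classes = 12     # number of relation labels
        self.gru_size = 230       # hidden units per GRU direction
        self.keep_prob = 0.5      # dropout keep probability
        self.num_layers = 1
        self.pos_size = 5         # dimension of each position embedding
        self.pos_num = 123        # number of distinct position ids
        # the number of entity pairs of each batch during training or testing
        self.big_num = 50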
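The value `pos_num = 123` lines up with the comment in the test script that relative positions are limited to -60..+60: 121 in-range offsets plus one overflow bucket on each side give 123 ids. The `pos_embed` helper called by the test code is not shown in this article, so the following is only a sketch of what such a mapping might look like. The bucket layout is an assumption.

```python
MAX_DIST = 60                # from the comment "max length of position embedding is 60"
POS_NUM = 2 * MAX_DIST + 3   # 121 in-range offsets + 2 overflow buckets = 123


def pos_embed(offset):
    """Map a relative offset (character index - entity index) to an id in [0, POS_NUM).

    Assumed layout (not confirmed by the article):
      0          -> offset < -MAX_DIST
      1 .. 121   -> offset in [-MAX_DIST, MAX_DIST]
      122        -> offset > MAX_DIST
    """
    if offset < -MAX_DIST:
        return 0
    if offset > MAX_DIST:
        return POS_NUM - 1
    return offset + MAX_DIST + 1


# position ids of every slot in a 70-character window, relative to an entity at index 3
ids = [pos_embed(i - 3) for i in range(70)]
print(ids[:5])
```

Each id then selects one row of a `[pos_num, pos_size]` embedding table, so every character carries two 5-dimensional position vectors, one per entity.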

import tensorflow as tf  # TensorFlow 1.x API (tf.placeholder, tf.contrib)


class GRU:
    # instantiated as network.GRU(...) in the training script
    def __init__(self, is_training, word_embeddings, settings):
        self.num_steps = num_steps = settings.num_steps
        self.vocab_size = vocab_size = settings.vocab_size
        self.num_classes = num_classes = settings.num_classes
        self.gru_size = gru_size = settings.gru_size
        self.big_num = big_num = settings.big_num

        # inputs: character ids and the two relative-position ids of every sentence
        self.input_word = tf.placeholder(dtype=tf.int32, shape=[None, num_steps], name='input_word')
        self.input_pos1 = tf.placeholder(dtype=tf.int32, shape=[None, num_steps], name='input_pos1')
        self.input_pos2 = tf.placeholder(dtype=tf.int32, shape=[None, num_steps], name='input_pos2')
        self.input_y = tf.placeholder(dtype=tf.float32, shape=[None, num_classes], name='input_y')
        # bag boundaries; the last entry is the total number of sentences in the batch
        self.total_shape = tf.placeholder(dtype=tf.int32, shape=[big_num + 1], name='total_shape')
        total_num = self.total_shape[-1]

        # trainable parameters
        word_embedding = tf.get_variable(initializer=word_embeddings, name='word_embedding')
        pos1_embedding = tf.get_variable('pos1_embedding', [settings.pos_num, settings.pos_size])
        pos2_embedding = tf.get_variable('pos2_embedding', [settings.pos_num, settings.pos_size])
        attention_w = tf.get_variable('attention_omega', [gru_size, 1])
        sen_a = tf.get_variable('attention_A', [gru_size])
        sen_r = tf.get_variable('query_r', [gru_size, 1])
        relation_embedding = tf.get_variable('relation_embedding', [self.num_classes, gru_size])
        sen_d = tf.get_variable('bias_d', [self.num_classes])

        gru_cell_forward = tf.contrib.rnn.GRUCell(gru_size)
        gru_cell_backward = tf.contrib.rnn.GRUCell(gru_size)

        # dropout on the GRU outputs, during training only
        if is_training and settings.keep_prob < 1:
            gru_cell_forward = tf.contrib.rnn.DropoutWrapper(gru_cell_forward, output_keep_prob=settings.keep_prob)
            gru_cell_backward = tf.contrib.rnn.DropoutWrapper(gru_cell_backward, output_keep_prob=settings.keep_prob)

        # list multiplication repeats the same cell object; fine for num_layers = 1 as configured
        cell_forward = tf.contrib.rnn.MultiRNNCell([gru_cell_forward] * settings.num_layers)
        cell_backward = tf.contrib.rnn.MultiRNNCell([gru_cell_backward] * settings.num_layers)

        sen_repre = []
        sen_alpha = []
        sen_s = []
        sen_out = []
        self.prob = []
        self.predictions = []
        self.loss = []
        self.accuracy = []
        self.total_loss = 0.0

        self._initial_state_forward = cell_forward.zero_state(total_num, tf.float32)
        self._initial_state_backward = cell_backward.zero_state(total_num, tf.float32)

        # embedding layer: concatenate character and position embeddings
        inputs_forward = tf.concat(axis=2, values=[tf.nn.embedding_lookup(word_embedding, self.input_word),
                                                   tf.nn.embedding_lookup(pos1_embedding, self.input_pos1),
                                                   tf.nn.embedding_lookup(pos2_embedding, self.input_pos2)])
        # the backward direction reads the same sentence reversed along the time axis
        inputs_backward = tf.concat(axis=2,
                                    values=[tf.nn.embedding_lookup(word_embedding, tf.reverse(self.input_word, [1])),
                                            tf.nn.embedding_lookup(pos1_embedding, tf.reverse(self.input_pos1, [1])),
                                            tf.nn.embedding_lookup(pos2_embedding,
                                                                   tf.reverse(self.input_pos2, [1]))])

        # Bi-GRU layer, unrolled manually over num_steps
        outputs_forward = []
        state_forward = self._initial_state_forward
        with tf.variable_scope('GRU_FORWARD') as scope:
            for step in range(num_steps):
                if step > 0:
                    scope.reuse_variables()
                (cell_output_forward, state_forward) = cell_forward(inputs_forward[:, step, :], state_forward)
                outputs_forward.append(cell_output_forward)

        outputs_backward = []
        state_backward = self._initial_state_backward
        with tf.variable_scope('GRU_BACKWARD') as scope:
            for step in range(num_steps):
                if step > 0:
                    scope.reuse_variables()
                (cell_output_backward, state_backward) = cell_backward(inputs_backward[:, step, :], state_backward)
                outputs_backward.append(cell_output_backward)

        output_forward = tf.reshape(tf.concat(axis=1, values=outputs_forward), [total_num, num_steps, gru_size])
        # flip the backward outputs so both directions are aligned by position
        output_backward = tf.reverse(
            tf.reshape(tf.concat(axis=1, values=outputs_backward), [total_num, num_steps, gru_size]), [1])

        # word-level attention layer (each "word" here is a single Chinese character)
        output_h = tf.add(output_forward, output_backward)
        # one score per position: attention_w applied to tanh(h_t)
        scores = tf.reshape(
            tf.matmul(tf.reshape(tf.tanh(output_h), [total_num * num_steps, gru_size]), attention_w),
            [total_num, num_steps])
        alpha = tf.reshape(tf.nn.softmax(scores), [total_num, 1, num_steps])
        # sentence representation: attention-weighted sum of the hidden states
        attention_r = tf.reshape(tf.matmul(alpha, output_h), [total_num, gru_size])
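The attention step at the end of the listing packs several reshapes into tensor operations over the whole batch. Unrolled for a single sentence, the same computation can be sketched in plain Python. This is an illustration rather than the author's code: `char_attention` and the toy values are made up here, `hidden` plays the role of one sentence's `output_h`, and `w` plays the role of `attention_w`.

```python
import math


def char_attention(hidden, w):
    """Attention over the time steps of one sentence.

    hidden: list of T vectors (each a list of d floats), e.g. summed Bi-GRU outputs
    w:      list of d floats, the attention weight vector
    Returns (alpha, r): the T attention weights and the d-dim weighted sum.
    """
    # score_t = w . tanh(h_t)
    scores = [sum(wi * math.tanh(hi) for wi, hi in zip(w, h)) for h in hidden]
    # numerically stable softmax over the T positions
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    alpha = [e / total for e in exps]
    # r = sum_t alpha_t * h_t
    dim = len(w)
    r = [sum(a * h[j] for a, h in zip(alpha, hidden)) for j in range(dim)]
    return alpha, r


hidden = [[0.1, -0.2], [0.9, 0.4], [-0.3, 0.0]]   # toy values: T = 3, d = 2
alpha, r = char_attention(hidden, w=[1.0, 1.0])
print(alpha)
print(r)
```

The weights in `alpha` sum to 1, so `r` is a convex combination of the per-character states, with the characters that score highest under `w` contributing most.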

Training and Using the Model

(1) Training the model:

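import datetime

import numpy as np
import tensorflow as tf  # TensorFlow 1.x API

import network  # module that defines Settings and GRU

FLAGS = tf.app.flags.FLAGS
# summary_dir is read below; the default value here is an assumption
tf.app.flags.DEFINE_string('summary_dir', '.', 'path to store summary')


def main(_):
    # the path to save models
    save_path = './model/'
    print('reading wordembedding')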

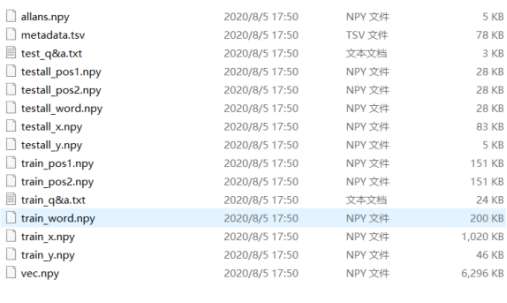

    wordembedding = np.load('./data/vec.npy')

    print('reading training data')
    train_y = np.load('./data/train_y.npy')
    train_word = np.load('./data/train_word.npy')
    train_pos1 = np.load('./data/train_pos1.npy')
    train_pos2 = np.load('./data/train_pos2.npy')

    settings = network.Settings()
    settings.vocab_size = len(wordembedding)
    settings.num_classes = len(train_y[0])

    big_num = settings.big_num

    with tf.Graph().as_default():
        sess = tf.Session()
        with sess.as_default():
            initializer = tf.contrib.layers.xavier_initializer()
            with tf.variable_scope("model", reuse=None, initializer=initializer):
                m = network.GRU(is_training=True, word_embeddings=wordembedding, settings=settings)
            global_step = tf.Variable(0, name="global_step", trainable=False)
            optimizer = tf.train.AdamOptimizer(0.0005)

            train_op = optimizer.minimize(m.final_loss, global_step=global_step)
            sess.run(tf.global_variables_initializer())
            saver = tf.train.Saver(max_to_keep=None)

            merged_summary = tf.summary.merge_all()
            summary_writer = tf.summary.FileWriter(FLAGS.summary_dir + '/train_loss', sess.graph)

            def train_step(word_batch, pos1_batch, pos2_batch, y_batch, big_num):
                feed_dict = {}
                total_shape = []
                total_num = 0
                total_word = []
                total_pos1 = []
                total_pos2 = []
                # flatten the bags of sentences; total_shape records where each bag starts
                for i in range(len(word_batch)):
                    total_shape.append(total_num)
                    total_num += len(word_batch[i])
                    for word in word_batch[i]:
                        total_word.append(word)
                    for pos1 in pos1_batch[i]:
                        total_pos1.append(pos1)
                    for pos2 in pos2_batch[i]:
                        total_pos2.append(pos2)
                total_shape.append(total_num)
                total_shape = np.array(total_shape)
                total_word = np.array(total_word)
                total_pos1 = np.array(total_pos1)
                total_pos2 = np.array(total_pos2)

                feed_dict[m.total_shape] = total_shape
                feed_dict[m.input_word] = total_word
                feed_dict[m.input_pos1] = total_pos1
                feed_dict[m.input_pos2] = total_pos2
                feed_dict[m.input_y] = y_batch

                temp, step, loss, accuracy, summary, l2_loss, final_loss = sess.run(
                    [train_op, global_step, m.total_loss, m.accuracy, merged_summary, m.l2_loss, m.final_loss],
                    feed_dict)
                time_str = datetime.datetime.now().isoformat()
                accuracy = np.reshape(np.array(accuracy), (big_num))
                acc = np.mean(accuracy)
                summary_writer.add_summary(summary, step)

                if step % 50 == 0:
                    tempstr = "{}: step {}, softmax_loss {:g}, acc {:g}".format(time_str, step, loss, acc)
                    print(tempstr)

            for one_epoch in range(settings.num_epochs):
                # visit the entity pairs in a fresh random order every epoch
                temp_order = list(range(len(train_word)))
                np.random.shuffle(temp_order)
                for i in range(int(len(temp_order) / float(settings.big_num))):
                    temp_word = []
                    temp_pos1 = []
                    temp_pos2 = []
                    temp_y = []

                    temp_input = temp_order[i * settings.big_num:(i + 1) * settings.big_num]
                    for k in temp_input:
                        temp_word.append(train_word[k])
                        temp_pos1.append(train_pos1[k])
                        temp_pos2.append(train_pos2[k])
                        temp_y.append(train_y[k])
                    # skip batches that contain more than 1500 sentences in total
                    num = 0
                    for single_word in temp_word:
                        num += len(single_word)

                    if num > 1500:
                        print('out of range')
                        continue

                    temp_word = np.array(temp_word)
                    temp_pos1 = np.array(temp_pos1)
                    temp_pos2 = np.array(temp_pos2)
                    temp_y = np.array(temp_y)

                    train_step(temp_word, temp_pos1, temp_pos2, temp_y, settings.big_num)

                    current_step = tf.train.global_step(sess, global_step)

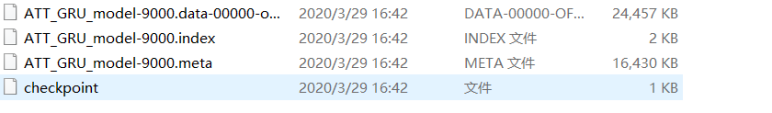

                    # after step 8000, save a checkpoint every 100 steps
                    if current_step > 8000 and current_step % 100 == 0:
                        print('saving model')
                        path = saver.save(sess, save_path + 'ATT_GRU_model', global_step=current_step)
                        tempstr = 'have saved model to ' + path
                        print(tempstr)


if __name__ == '__main__':
    tf.app.run()
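Inside `train_step`, each entity pair contributes a bag of sentences. The bags are concatenated into one flat batch, and `total_shape` records where each bag starts. That is why the placeholder has shape `[big_num + 1]`: one start offset per bag plus the final total. A minimal stand-alone sketch of that bookkeeping follows. The function name `flatten_bags` and the toy data are illustrative only.

```python
def flatten_bags(bags):
    """Concatenate bags of sentences and record the bag boundaries.

    bags: list of bags, each bag a list of sentences.
    Returns (flat, offsets) where bag i occupies flat[offsets[i]:offsets[i + 1]].
    """
    flat, offsets = [], [0]
    for bag in bags:
        flat.extend(bag)
        offsets.append(len(flat))
    return flat, offsets


bags = [["s0", "s1"], ["s2"], ["s3", "s4", "s5"]]   # three entity pairs
flat, offsets = flatten_bags(bags)
print(offsets)                         # [0, 2, 3, 6]
print(flat[offsets[2]:offsets[3]])     # sentences of the third bag
```

With the offsets available, the model can slice the flat batch back into per-pair groups, and the last offset doubles as the total sentence count (`total_num` in the network code).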

(2) Testing the model:

import numpy as np

# word2id, relation2id, id2relation, pos_embed, test_step and test_settings
# are assumed to be defined earlier in the test script (not shown in this excerpt)
while True:
    # BUG: Encoding error if user input directly from command line.
    line = input('Enter a Chinese sentence in the format "name1 name2 sentence": ')
    # Alternatively, read the line from a test file:
    '''
    infile = open('test.txt', encoding='utf-8')
    line = ''
    for orgline in infile:
        line = orgline.strip()
        break
    infile.close()
    '''

    # split into at most three fields so a sentence containing spaces stays intact
    en1, en2, sentence = line.strip().split(None, 2)
    print("Entity 1: " + en1)
    print("Entity 2: " + en2)
    print(sentence)
    relation = 0
    # fall back to position 0 when an entity does not occur in the sentence
    en1pos = sentence.find(en1)
    if en1pos == -1:
        en1pos = 0
    en2pos = sentence.find(en2)
    if en2pos == -1:
        en2pos = 0
    output = []
    # length of sentence is 70
    fixlen = 70
    # max length of position embedding is 60 (-60~+60)
    maxlen = 60

    # Encoding test x: start from BLANK padding with relative positions filled in
    for i in range(fixlen):
        word = word2id['BLANK']
        rel_e1 = pos_embed(i - en1pos)
        rel_e2 = pos_embed(i - en2pos)
        output.append([word, rel_e1, rel_e2])

    for i in range(min(fixlen, len(sentence))):
        # use the UNK id for characters outside the vocabulary
        output[i][0] = word2id.get(sentence[i], word2id['UNK'])
    test_x = []
    test_x.append([output])

    # Encoding test y: a dummy one-hot label, required by the input_y placeholder
    label = [0 for i in range(len(relation2id))]
    label[0] = 1
    test_y = []
    test_y.append(label)

    test_x = np.array(test_x)
    test_y = np.array(test_y)

    # split the [word, pos1, pos2] triples into three parallel arrays
    test_word = []
    test_pos1 = []
    test_pos2 = []

    for i in range(len(test_x)):
        word = []
        pos1 = []
        pos2 = []
        for j in test_x[i]:
            temp_word = []
            temp_pos1 = []
            temp_pos2 = []
            for k in j:
                temp_word.append(k[0])
                temp_pos1.append(k[1])
                temp_pos2.append(k[2])
            word.append(temp_word)
            pos1.append(temp_pos1)
            pos2.append(temp_pos2)
        test_word.append(word)
        test_pos1.append(pos1)
        test_pos2.append(pos2)

    test_word = np.array(test_word)
    test_pos1 = np.array(test_pos1)
    test_pos2 = np.array(test_pos2)

    prob, accuracy = test_step(test_word, test_pos1, test_pos2, test_y)
    prob = np.reshape(np.array(prob), (1, test_settings.num_classes))[0]
    print("Predicted relations:")
    # indices of the three most probable relations, best first
    top3_id = prob.argsort()[-3:][::-1]
    for n, rel_id in enumerate(top3_id):
        print("No." + str(n + 1) + ": " + id2relation[rel_id] + ", Probability is " + str(prob[rel_id]))
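The expression `prob.argsort()[-3:][::-1]` sorts the indices in ascending order of probability, keeps the last three, and reverses them so that the most likely relation comes first. The same ranking can be sketched without NumPy. The helper `top_k`, the probabilities and the placeholder labels below are made up for illustration and are not the model's real output or label set.

```python
def top_k(prob, k=3):
    """Return the indices of the k largest probabilities, best first."""
    order = sorted(range(len(prob)), key=lambda i: prob[i], reverse=True)
    return order[:k]


# hypothetical probabilities and placeholder labels
prob = [0.05, 0.60, 0.10, 0.25]
id2relation = ["rel_0", "rel_1", "rel_2", "rel_3"]

for n, rel_id in enumerate(top_k(prob)):
    print("No." + str(n + 1) + ": " + id2relation[rel_id] + ", Probability is " + str(prob[rel_id]))
```

When two probabilities are exactly equal, this helper and the `argsort` version may order the tied indices differently.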
